Dr. Adam Brentnall is a statistician at Barts Clinical Trials Unit, Wolfson Institute of Population Health, Queen Mary University of London (QMUL). He also works closely with the CRUK and QMUL Cancer Prevention Trials Unit. He is currently a member of the UK National Screening Committee Research and Methodology Group, and of the CRUK Expert Review Panel on Early Detection & Diagnosis Trials, Behavioural, Health Systems and Health Economics Research. He recently served as statistician on a National Institute for Health and Care Excellence (NICE) committee developing guidelines on identifying and managing familial and genetic risk for ovarian cancer. His collaborative research has involved the design and analysis of clinical trials, epidemiological studies and laboratory studies evaluating cancer prevention and early detection interventions, as well as statistical methodology driven by these studies.

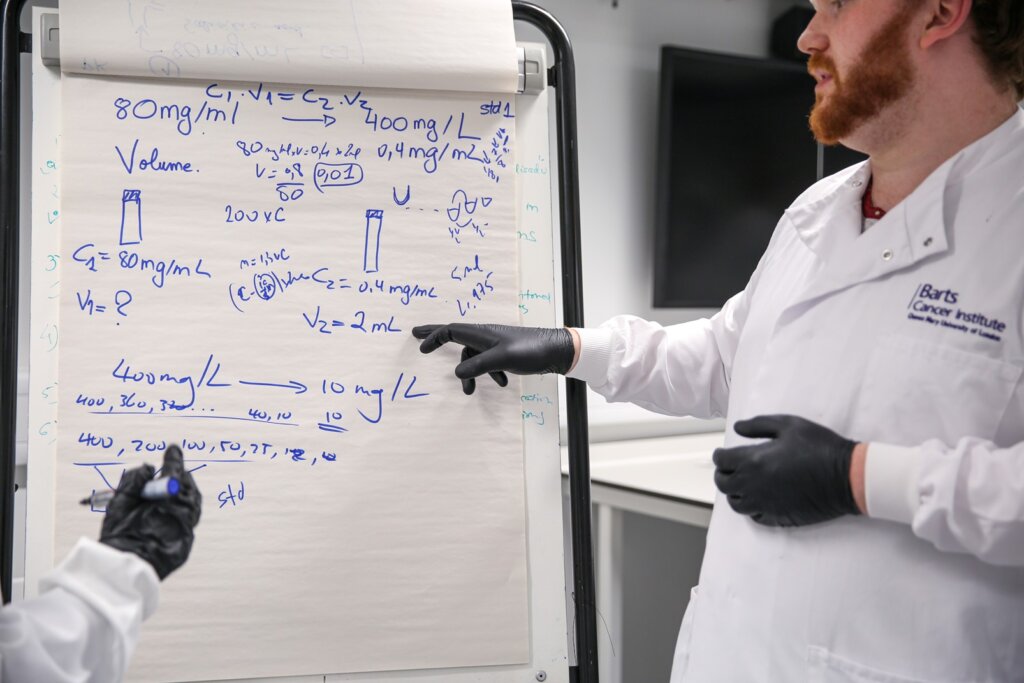

Each autumn I introduce MSc students at Barts Cancer Institute to research methods statistics, including the importance of sample size.

I emphasise why all researchers should think about their study design and sample size before carrying out research.

The issue of sample size is closely related to research waste. Studies that are too small to answer the research question are flawed and unreliable, and constitute research waste. Studies that are too large are also research waste: they use unnecessary research funding, healthcare resources and participant time, and expose more participants than necessary to potential risks. An oversized trial may conclude that an intervention does not work, but at the expense of investigating other interventions that might actually work.

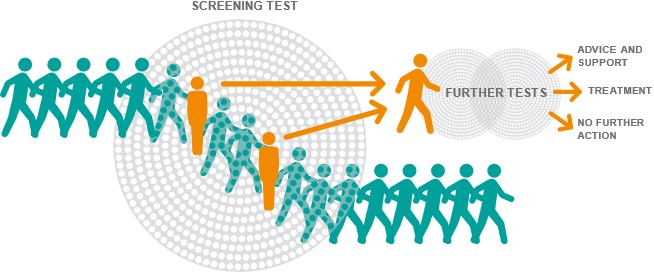

Investigating whether cancer screening helps people live longer requires many volunteers to take part in research. This is because, by its nature, relatively few of those screened may directly benefit from screening. For example, in breast screening in the UK, for every 1,000 women who attend their mammogram screening appointment, approximately 40 will be recalled for further investigation. Of these, approximately 8 will be found to have cancer. In other words, only these 8 of the 1,000 screened may have their lives extended by early cancer detection.
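The approximate breast screening figures above can be restated as the usual screening summary measures. This small Python sketch only re-expresses the numbers quoted in the text (per 1,000 women screened); the variable names are illustrative, and real programme statistics vary by year and age group.

```python
# Approximate UK breast screening figures quoted in the text, per 1,000 women screened.
screened = 1000
recalled = 40  # screen-positive: recalled for further investigation
cancers = 8    # recalled women found to have cancer

recall_rate = recalled / screened     # fraction of screened women who test positive
ppv = cancers / recalled              # positive predictive value of a recall
detection_rate = cancers / screened   # cancers found per woman screened
false_positives = recalled - cancers  # recalled, but no cancer found

print(f"Recall rate: {recall_rate:.1%}")
print(f"Positive predictive value: {ppv:.0%}")
print(f"Cancers detected per 1,000 screened: {detection_rate * 1000:.0f}")
print(f"Recalled without cancer: {false_positives}")
```

On these figures only 0.8% of those screened can benefit directly from early detection, while 32 of the 40 women recalled turn out not to have cancer.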

It is also worth noting that the 40 who test positive are at greatest risk of the potential harms of screening. For example, some of those with a positive screen might undergo cancer treatment that they would not have had if they had not been screened. That is, without screening, they would have died of something else before the cancer became symptomatic and was detected; the cancer would not have been their cause of death (this is called overdiagnosis). Others will be found, after further investigation, not to have cancer, and may experience anxiety as a result.

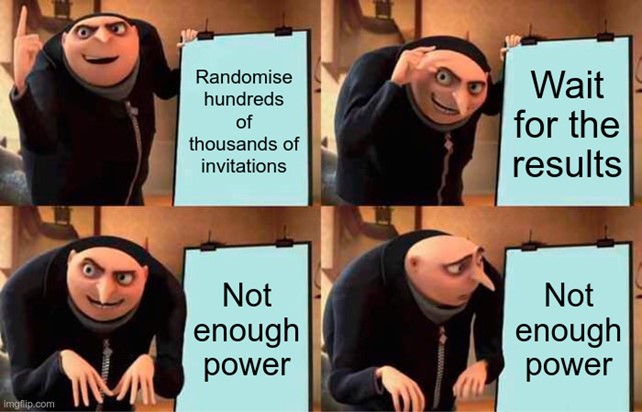

Given the scale of the study population needed for screening trials, it is critical to get the trial design right. Some previous studies have randomised the offer of screening: for example, sending some people a letter inviting them to be screened as part of a trial, sending others no invitation, and comparing the two randomised groups. This design can be tremendously inefficient compared with the alternatives. Suppose half of those invited take up the offer (an optimistic assumption for uptake of an unproven test). Because those who decline cannot benefit, the difference between the randomised groups is roughly halved, and since the required sample size scales with the inverse square of that difference, one would need to randomise at least four times as many people as in a traditional design where randomisation is done after participants consent. The trial would then need a budget for screening costs that is twice (4 × 0.5) that of a design with randomisation after consent, as well as twice the clinic capacity, twice the number of volunteers who receive the intervention and are exposed to its potential harms, and so on. Another issue is that one does not know in advance how many people will take up the invitation, which could leave the trial underpowered and therefore flawed.
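The "at least four times" figure can be checked with the standard normal-approximation sample size formula for comparing two proportions. This Python sketch is an illustration under stated assumptions, not a trial calculation: the cancer death risks are hypothetical, there is assumed to be no screening in the control arm, and people who decline the invitation are assumed to have the same outcome as if they had never been invited, so the effect in the invited arm is diluted in proportion to uptake.

```python
from statistics import NormalDist


def n_per_arm(p_control, p_intervention, alpha=0.05, power=0.9):
    """Normal-approximation sample size per arm to compare two proportions."""
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / 2)  # two-sided test
    z_beta = z(power)
    variance = p_control * (1 - p_control) + p_intervention * (1 - p_intervention)
    return (z_alpha + z_beta) ** 2 * variance / (p_control - p_intervention) ** 2


# Hypothetical cancer death risks over trial follow-up (illustration only).
p_control = 0.010   # not screened
p_screened = 0.008  # screened (assumed benefit)
uptake = 0.5        # fraction of invitees who take up screening

# Design A: randomise after consent, so everyone in the intervention arm is screened.
n_consent = n_per_arm(p_control, p_screened)

# Design B: randomise the invitation; the effect is diluted by uptake.
p_invited = p_control - uptake * (p_control - p_screened)
n_invite = n_per_arm(p_control, p_invited)

size_ratio = n_invite / n_consent
# People actually screened: the whole intervention arm in A, only uptakers in B.
screening_ratio = (uptake * n_invite) / n_consent

print(f"Randomised per arm, consent design:    {n_consent:,.0f}")
print(f"Randomised per arm, invitation design: {n_invite:,.0f}")
print(f"Trial size ratio: {size_ratio:.2f}")
print(f"Screening workload ratio: {screening_ratio:.2f}")
```

With these made-up risks the invitation design needs about 4.2 times as many people randomised and screens about 2.1 times as many people. The extra above 4 and 2 comes from the variance term; the dominant effect is the 1/uptake² scaling.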

In many circumstances this design will lead to research waste. A recent commentary discusses in more detail other reasons why this approach is often misguided.

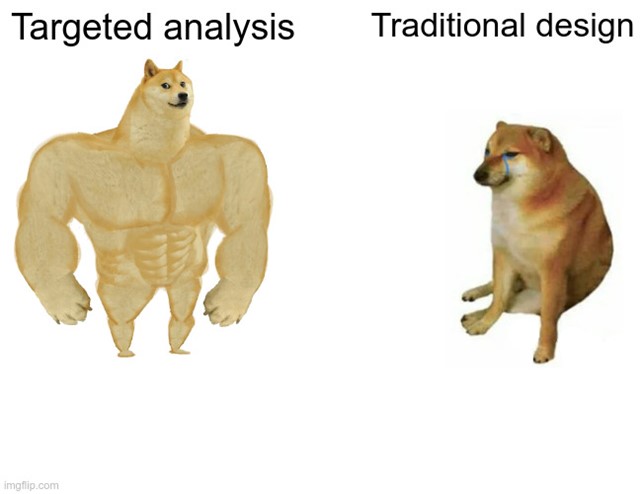

The main problem with randomising the offer of screening is that many people invited to screening will not take up the offer and so will not be screened. This group cannot help us understand whether screening extends lives, because they are not screened. But, as noted above, those who are screened but test negative also cannot help us understand whether screening extends lives, because they cannot benefit from early cancer detection either. Ideally, we would target the analysis at those who test positive, for instance by counting the number of deaths among test-positive participants in each randomised group. Where feasible, this targeted analysis could dramatically reduce the sample size needed to evaluate potential benefits and harms, compared with a traditional analysis.
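To see why targeting helps, compare the endpoints the two analyses use. A traditional analysis compares all cancer deaths between arms; a targeted analysis compares cancer deaths among people who test positive (in the control arm, this requires knowing who would have tested positive). If screening can only benefit those who test positive, both analyses are looking for the same absolute reduction in deaths, but the targeted endpoint has a much smaller background rate and therefore less noise. The Python sketch below illustrates this with the normal-approximation formula for two proportions. All risks are hypothetical, and it is a simplified illustration rather than a reproduction of any published calculation.

```python
from statistics import NormalDist


def n_per_arm(p_control, p_intervention, alpha=0.05, power=0.9):
    """Normal-approximation sample size per arm to compare two proportions."""
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / 2)  # two-sided test
    z_beta = z(power)
    variance = p_control * (1 - p_control) + p_intervention * (1 - p_intervention)
    return (z_alpha + z_beta) ** 2 * variance / (p_control - p_intervention) ** 2


# Hypothetical risks over trial follow-up (illustration only).
death_all_control = 0.010  # any cancer death, control arm
death_pos_control = 0.004  # cancer death AND would test positive, control arm
deaths_prevented = 0.002   # assumed benefit, confined to test-positive people

# Traditional analysis: all cancer deaths in each arm.
n_traditional = n_per_arm(death_all_control, death_all_control - deaths_prevented)

# Targeted analysis: only cancer deaths among people who test positive.
n_targeted = n_per_arm(death_pos_control, death_pos_control - deaths_prevented)

print(f"Per arm, traditional analysis: {n_traditional:,.0f}")
print(f"Per arm, targeted analysis:    {n_targeted:,.0f}")
print(f"Reduction factor: {n_traditional / n_targeted:.1f}")
```

With these made-up numbers the targeted analysis needs roughly a third as many participants. For the same number of deaths prevented, the gain is larger when test-positive deaths make up a smaller share of all cancer deaths.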

In the past it has been difficult to use a targeted approach. For some screening tests it is unethical to apply the screening test in the control arm, either retrospectively, or prospectively without informing the trial participant of the result. This is clearest for imaging screening tests such as mammography for breast cancer screening or low-dose CT for lung cancer screening. Here it would be impractical and unethical to use an imaging method in the control group, identify cancer, and then do nothing about it.

The good news is that the targeted approach is likely to be feasible for non-imaging screening tests, such as those that use biological samples. For retrospective testing, the key is that testing is separated from taking the sample: samples can be collected and stored, then tested later. This idea will likely be very important for trials of new multi-cancer early detection (MCED) blood tests. Indeed, the targeted approach is planned for the NHS-Galleri trial of a blood-based multi-cancer test. The idea can also be used when samples are tested prospectively; potential examples include testing blood samples for PSA in prostate cancer screening, or even testing poo samples in colorectal cancer screening. Such analyses will also provide insight into causal mechanisms, and data on a range of thresholds for defining screening test positivity.

We recently examined sample size requirements for two targeted approaches that use retrospective testing. Both rely on storing samples from the control group in a biobank so that they can be tested later.

In one approach, the trialists test samples from everyone at the time of analysis; in the other, they test samples only from those with the endpoint of interest (e.g. death from cancer).

Both approaches have merit. We found that the choice between them may depend on how much testing everyone reduces the required trial size, weighed against the cost of testing all samples in the control group. However, whichever targeted analysis is chosen, in many realistic scenarios these designs will lead to much smaller cancer screening trials than the traditional approach.
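The trade-off between the two retrospective-testing approaches can be framed as a simple cost comparison: testing every control-arm sample may allow a smaller trial, while testing only samples from participants with the endpoint needs far fewer tests. The Python sketch below is a toy model, not a budgeting method. The trial sizes, endpoint rate and unit costs are all hypothetical, and costs are assumed to be just a per-participant cost plus a per-test cost for control-arm samples.

```python
def trial_cost(n_per_arm, n_control_tests, cost_per_participant, cost_per_test):
    """Toy total cost: two arms of participants plus retrospective control-arm tests."""
    return 2 * n_per_arm * cost_per_participant + n_control_tests * cost_per_test


def compare_designs(cost_per_test, cost_per_participant=100.0):
    """Return (cost of testing everyone, cost of testing endpoint cases only)."""
    # Hypothetical sizes: testing everyone is assumed to permit a smaller trial.
    n_test_all = 40_000
    n_test_cases = 50_000
    endpoint_rate = 0.01  # hypothetical fraction of the control arm reaching the endpoint

    # Test everyone: every control-arm sample is tested.
    cost_all = trial_cost(n_test_all, n_test_all, cost_per_participant, cost_per_test)
    # Test cases only: just the control-arm samples of those with the endpoint.
    cases_tested = round(endpoint_rate * n_test_cases)
    cost_cases = trial_cost(n_test_cases, cases_tested, cost_per_participant, cost_per_test)
    return cost_all, cost_cases


for cost_per_test in (10.0, 500.0):
    cost_all, cost_cases = compare_designs(cost_per_test)
    cheaper = "test everyone" if cost_all < cost_cases else "test endpoint cases only"
    print(f"Test cost {cost_per_test:>5.0f}: everyone {cost_all:,.0f}, "
          f"cases only {cost_cases:,.0f} -> {cheaper}")
```

Under these invented inputs, testing everyone is cheaper when tests are inexpensive, and testing only endpoint cases wins when each test is costly, which is the kind of trade-off described above.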

In conclusion, targeted design and analysis of cancer screening trials is likely to reduce research waste, and should be considered more often by those running trials. These designs have some drawbacks, discussed in our paper, and they will not always be the best choice. However, in many circumstances, targeted designs and analyses have a smaller sample size requirement than traditional designs, and will help funders get more from the research they support.

The views expressed are those of the authors. Posting of the blog does not signify that the Cancer Prevention Group endorses those views or opinions.